Demonstrating Data-to-Knowledge Pipelines for Connecting Production Sites in the World Wide Lab - Replication Package

Documentation: https://franka-wwl-demonstrator-iop-workstreams-ws-a3-55c043b308e72e51f.pages.git-ce.rwth-aachen.de/

Code Repository: https://git-ce.rwth-aachen.de/leon-gorissen/demonstrating-data-to-knowledge-pipelines

Live Data at Coscine: https://coscine.rwth-aachen.de/p/iop-ws-a.iii-fer-wwl-demo-80937714/

Live Data request form: https://coscine.rwth-aachen.de/pid/?pid=21.11102%2f5f19998a-47ca-4801-b446-729a4ac140e3

Publications

arXiv preprint: https://arxiv.org/abs/2412.12231

Data and Source Code: https://publications.rwth-aachen.de/record/1008373

Published Benchmark Models Dataset: http://doi.org/10.18154/RWTH-2025-00450

Published Trajectory Dataset: http://doi.org/10.18154/RWTH-2025-00466

Published Source Code: http://doi.org/10.18154/RWTH-2025-00519

Acknowledgement

Funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) under Germany's Excellence Strategy – EXC-2023 Internet of Production – 390621612

Data availability

Important

Use your ORCID email address in the data request form.

Live data is available upon request. Please use the Live Data request form linked above.

Concept

This project explores the following aspects:

- Data-to-knowledge pipelines within the World Wide Lab
- Automated research data storage and management, especially metadata
- Continuous multi-instance dynamics learning for Franka Emika robots

This project extends the previous work presented by Schneider et al. 2024.

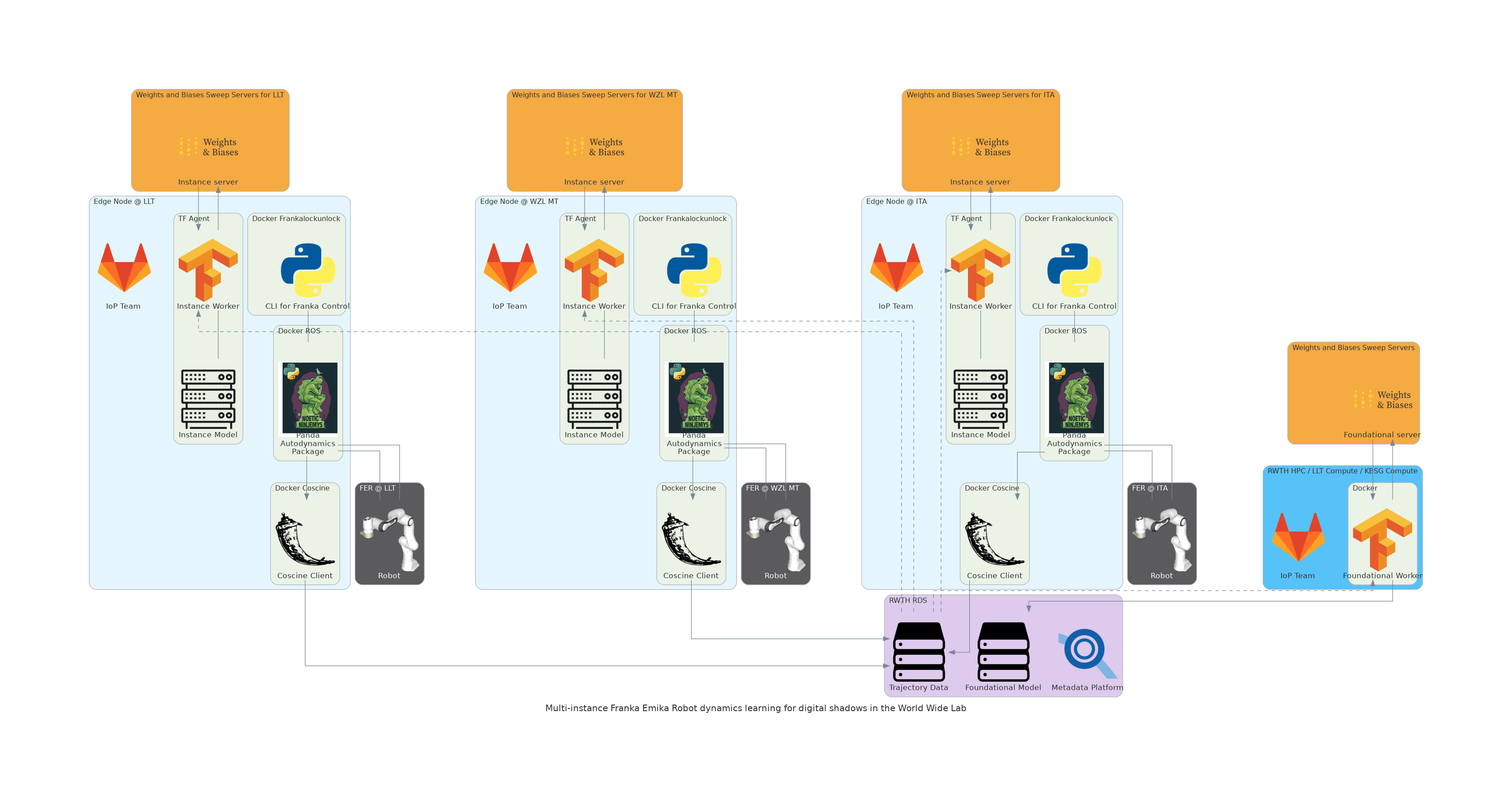

Three instances of Franka Emika robots are deployed within the RWTH Aachen University Cluster of Excellence Internet of Production: at the Chair for Laser Technology (LLT), the Chair for Machine Tools (WZL MT), and the Institute for Textile Technology (ITA). Generating meaningful knowledge from the data gathered on these three instances requires automated data storage and management. Therefore, automated data generation procedures have been implemented and are executed on each robot instance. The data is automatically tagged with metadata and pushed to NRW RDS via Coscine, the research data management platform of RWTH Aachen University. Coscine provides both the generated data (Trajectory Data resource) and important metadata, such as the source code used for data generation and the universally unique identifier (UUID) of the robot instance.

This data is used to train an inverse dynamics model of the robotic systems. Agents registered with a Weights & Biases sweep server continuously receive new hyperparameter sets from the sweep server, pull up-to-date data from NRW RDS, and push well-performing models back to NRW RDS (Inverse Dynamics Models resource). The trained models are part of the AI-Toolbox of Workstream A.III and can be used at the participating institutions, completing the data-to-knowledge pipeline demonstrated within the World Wide Lab.

Setup

This app is containerized.

Install Docker and Docker Compose following their official installation guides.

Important

Make sure to follow the Docker post-installation steps.

Ensure that incoming traffic is not blocked. Check both ufw and iptables.

The Franka Control Interface (FCI) requires a real-time kernel (PREEMPT_RT patch). Set it up following the FCI documentation.

This setup uses Docker and Docker Compose. In addition, X11 forwarding is used for GUI support, so allow your local user to connect to your X server:

```bash
xhost +SI:localuser:$USER
```
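The prerequisites above can be checked with a small script before the first `docker compose up`. The sketch below is hypothetical and not part of the repository. It assumes Docker Compose v2 (the `docker compose` plugin) and detects a real-time kernel the way the libfranka installation guide suggests, via `/sys/kernel/realtime` or the `PREEMPT RT` string in the kernel version (recent kernels print `PREEMPT_RT`). The helper names `kernel_is_rt`, `in_docker_group`, `x11_access_granted`, `report`, and `preflight` are illustrative.

```bash
#!/usr/bin/env bash
# Hypothetical preflight check for the host prerequisites; not part of the
# repository. Firewall rules (ufw, iptables) need root to inspect and are
# left to a manual check.

# kernel_is_rt VERSION_STRING: succeeds for PREEMPT_RT kernels.
kernel_is_rt() {
  case "$1" in
    *"PREEMPT RT"* | *PREEMPT_RT*) return 0 ;;
    *) return 1 ;;
  esac
}

# in_docker_group "GROUP GROUP ...": succeeds if "docker" is one of the groups
# (adding the user to this group is a Docker post-installation step).
in_docker_group() {
  [[ " $1 " == *" docker "* ]]
}

# x11_access_granted XHOST_OUTPUT USER: succeeds if xhost lists SI:localuser:USER.
x11_access_granted() {
  grep -qxF "SI:localuser:$2" <<< "$1"
}

# report OK|FAIL MESSAGE: print one aligned result line.
report() { printf '%-4s %s\n' "$1" "$2"; }

preflight() {
  local failures=0 user=${USER:-$(id -un)}
  if command -v docker > /dev/null && docker compose version > /dev/null 2>&1; then
    report OK "docker and the compose plugin are installed"
  else
    report FAIL "docker or 'docker compose' is missing"; failures=$((failures + 1))
  fi
  if in_docker_group "$(id -nG)"; then
    report OK "$user is in the docker group"
  else
    report FAIL "$user is not in the docker group"; failures=$((failures + 1))
  fi
  if [[ -r /sys/kernel/realtime && $(< /sys/kernel/realtime) == 1 ]] || kernel_is_rt "$(uname -v)"; then
    report OK "real-time kernel detected"
  else
    report FAIL "no PREEMPT_RT kernel detected"; failures=$((failures + 1))
  fi
  if command -v xhost > /dev/null && x11_access_granted "$(xhost 2> /dev/null)" "$user"; then
    report OK "X server accepts SI:localuser:$user"
  else
    report FAIL "X server access not granted for $user (see xhost above)"; failures=$((failures + 1))
  fi
  # Exit status = number of failed checks.
  return "$failures"
}

if [[ "${BASH_SOURCE[0]}" == "$0" ]]; then
  preflight
fi
```

Saved, for example, as `preflight.sh`, it can be run with `bash preflight.sh`; the exit status is the number of failed checks. Firewall rules still need a manual look, for example with `sudo ufw status verbose` and `sudo iptables -L -n`.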

Next, clone the repository:

```bash
git clone https://git-ce.rwth-aachen.de/iop/workstreams/ws.a3/franka_wwl_demonstrator
cd franka_wwl_demonstrator
```

Make sure to set up your environment variables according to the documentation of each service.
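Once the repository is cloned and the variables are exported, `docker compose config --services` offers a quick sanity check: it lists the services defined in the Compose file and warns on stderr about variables the file references but that are unset. The hypothetical helper below (not part of the repository) compares that list with the four services this README starts; the names `REQUIRED_SERVICES`, `missing_services`, and `check_compose_services` are illustrative.

```bash
#!/usr/bin/env bash
# Hypothetical sanity check, not part of the repository: run it from the
# repository root to confirm that the Compose file defines the services
# this README starts.

REQUIRED_SERVICES=(ros coscine frankalockunlock dynamics_learning)

# missing_services DEFINED...: print every required service not among DEFINED.
missing_services() {
  local required defined found
  for required in "${REQUIRED_SERVICES[@]}"; do
    found=0
    for defined in "$@"; do
      if [[ $defined == "$required" ]]; then
        found=1
        break
      fi
    done
    if (( found == 0 )); then
      echo "$required"
    fi
  done
}

check_compose_services() {
  local output missing
  if ! command -v docker > /dev/null; then
    echo "docker not found" >&2
    return 127
  fi
  # One service name per line; unset variables show up as warnings on stderr.
  output=$(docker compose config --services) || return 1
  local -a defined
  mapfile -t defined <<< "$output"
  missing=$(missing_services "${defined[@]}")
  if [[ -n $missing ]]; then
    echo "services missing from the compose file: ${missing//$'\n'/ }" >&2
    return 1
  fi
  echo "compose file defines: ${REQUIRED_SERVICES[*]}"
}

if [[ "${BASH_SOURCE[0]}" == "$0" ]]; then
  check_compose_services
fi
```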

Usage

Data Generation

First, export all required environment variables:

```bash
export DATATYPE="command"          # trajectory data type: "command" or "attained"
export DATASET_TYPE="TRAIN"        # "TRAIN" or "TEST"
export FRANKA_GRIPPER="true"       # gripper status: "true" or "false"
export MOVEMENT="true"
export COSCINE_API_TOKEN="your_api_token"
export ROBOT_UUID="your_robot_UUID"
export ROBOT_DESCRIPTION="your_robot_description"
export ROBOT_MANUFACTURER="your_robot_manufacturer"
export ROBOT_MODEL="your_robot_model"
export FRANKA_PASSWORD="your_password_here"
export FRANKA_IP="your_IP_here"
export FRANKA_USERNAME="your_username_here"
```
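Because a typo in one of these variables may only surface once the containers are running, a quick check before `docker compose up` can help. The sketch below is hypothetical and not part of the repository: the allowed values are taken from the placeholders above, and the names `DATAGEN_VARS`, `check_enum`, and `validate_datagen_env` are illustrative. Running it as a separate script (rather than sourcing it) means it only sees exported variables, which is also what Docker Compose inherits.

```bash
#!/usr/bin/env bash
# Hypothetical check of the data generation variables; not part of the repository.

DATAGEN_VARS=(DATATYPE DATASET_TYPE FRANKA_GRIPPER MOVEMENT COSCINE_API_TOKEN
  ROBOT_UUID ROBOT_DESCRIPTION ROBOT_MANUFACTURER ROBOT_MODEL
  FRANKA_PASSWORD FRANKA_IP FRANKA_USERNAME)

# check_enum NAME ALLOWED...: fail with a message if $NAME is set but not in ALLOWED.
check_enum() {
  local name=$1 value allowed
  shift
  value=${!name:-}
  if [[ -z $value ]]; then
    return 0  # empty values are reported by the "missing" check instead
  fi
  for allowed in "$@"; do
    if [[ $value == "$allowed" ]]; then
      return 0
    fi
  done
  echo "invalid $name='$value' (expected one of: $*)" >&2
  return 1
}

# validate_datagen_env: returns the number of problems found (0 = ready).
validate_datagen_env() {
  local problems=0 name
  for name in "${DATAGEN_VARS[@]}"; do
    if [[ -z ${!name:-} ]]; then
      echo "missing $name" >&2
      problems=$((problems + 1))
    fi
  done
  check_enum DATATYPE command attained || problems=$((problems + 1))
  check_enum DATASET_TYPE TRAIN TEST || problems=$((problems + 1))
  check_enum FRANKA_GRIPPER true false || problems=$((problems + 1))
  return "$problems"
}

if [[ "${BASH_SOURCE[0]}" == "$0" ]]; then
  validate_datagen_env && echo "data generation environment looks complete"
fi
```

To keep the robot password out of the shell history, it can be read interactively instead of being typed into an `export` line: `read -rsp 'Franka password: ' FRANKA_PASSWORD && export FRANKA_PASSWORD`.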

To start the data generation pipeline, run:

```bash
docker compose up -d --build ros coscine frankalockunlock
```

The services run detached (`-d`), so the pipeline can be stopped from any terminal with:

```bash
docker compose down
```

For detailed or individual usage of each service, follow the corresponding README.

Foundation Model Training

First, export all required environment variables:

```bash
export COSCINE_API_TOKEN="your_coscine_api_token"
export WANDB_API_TOKEN="your_wandb_api_token"
export WANDB_ENTITY="your_wandb_username"
export WANDB_SWEEP_ID="your_sweep_id"
```

To start a foundation model training agent, run:

```bash
docker compose up -d dynamics_learning
```
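A W&B sweep can be worked on by several agents in parallel. Assuming each `dynamics_learning` container runs exactly one sweep agent, and that the service does not set a fixed `container_name` (Compose cannot scale such services), more agents can be started on one host with `docker compose up --scale`. The wrapper below is a hypothetical sketch, not part of the repository; `start_sweep_agents`, `TRAINING_VARS`, and the `DRY_RUN` switch are illustrative names.

```bash
#!/usr/bin/env bash
# Hypothetical launcher, not part of the repository: starts COUNT replicas of
# the dynamics_learning service. Assumption: each replica runs one sweep agent.
# Set DRY_RUN=1 to print the command instead of running it.

TRAINING_VARS=(COSCINE_API_TOKEN WANDB_API_TOKEN WANDB_ENTITY WANDB_SWEEP_ID)

start_sweep_agents() {
  local count=${1:-1} name
  if ! [[ $count =~ ^[1-9][0-9]*$ ]]; then
    echo "agent count must be a positive integer, got '$count'" >&2
    return 2
  fi
  for name in "${TRAINING_VARS[@]}"; do
    if [[ -z ${!name:-} ]]; then
      echo "missing environment variable: $name" >&2
      return 1
    fi
  done
  local cmd=(docker compose up -d --scale "dynamics_learning=$count" dynamics_learning)
  if [[ ${DRY_RUN:-0} == 1 ]]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}

if [[ "${BASH_SOURCE[0]}" == "$0" ]]; then
  start_sweep_agents "$@"
fi
```

For example, with the variables above exported, `DRY_RUN=1 bash start_sweep_agents.sh 3` prints `docker compose up -d --scale dynamics_learning=3 dynamics_learning`; without `DRY_RUN` the command is executed. Whether several agents fit on one machine depends on its GPU and memory.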

Instance Model Training

Note

Content will follow shortly.

To start an instance training agent, run: